MSc thesis project proposal

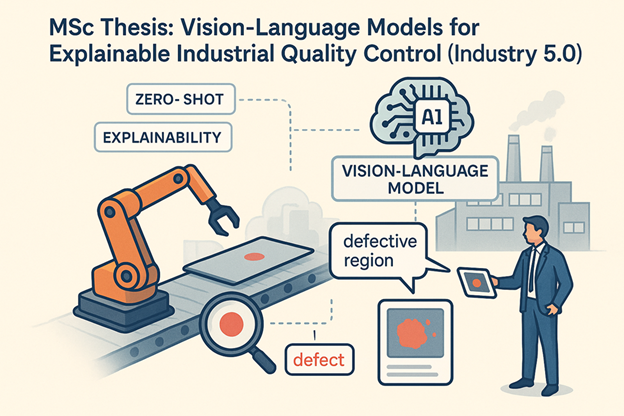

Vision–Language Models for Explainable Industrial Quality Control

Join us to build anomaly detectors that not only find defects on the factory line but also explain them in language that operators and auditors can trust. This thesis explores how modern vision–language models can make visual inspection more transparent, adaptable, and human-centric, addressing a core Industry 5.0 challenge.

You will develop an explainable anomaly detection pipeline that uses vision–language models to provide semantic rationales, zero- or few-shot adaptability, and conversational refinement. You will benchmark state-of-the-art approaches against strong unsupervised baselines on established datasets such as MVTec AD and VisA. Detection and localisation will be evaluated with AUROC and PRO, alongside the grounding and faithfulness of explanations, latency, and privacy. The goal is a practical system that meets the runtime and privacy constraints typical of factory floors without sacrificing accuracy.
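As a rough illustration of the image-level AUROC mentioned above, the sketch below computes it as the probability that a defective image receives a higher anomaly score than a normal one. The function name `image_auroc` and the example scores are hypothetical and not part of the project. In practice a library routine such as scikit-learn's `roc_auc_score` would typically be used, and PRO, which works on pixel-level regions, is not covered here.

```python
from typing import Sequence


def image_auroc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUROC as the fraction of (defective, normal) pairs ranked correctly.

    Ties between a defective and a normal score count as half a win.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]  # defective images
    neg = [s for s, y in zip(scores, labels) if y == 0]  # normal images
    if not pos or not neg:
        raise ValueError("need at least one normal and one defective sample")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical anomaly scores for six images (1 = defective, 0 = normal).
scores = [0.10, 0.45, 0.40, 0.80, 0.65, 0.20]
labels = [0, 0, 1, 1, 1, 0]
auroc = image_auroc(scores, labels)
print(f"image-level AUROC: {auroc:.3f}")
```

The pairwise form is quadratic in the number of images, which is acceptable for a small illustration but not for full benchmark runs.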

Assignment

The research will investigate how close zero- and few-shot methods can get to top classical anomaly detectors on real benchmarks, and in which settings they outperform them through superior data efficiency, faster ramp-up on new product lines, or clearer explainability. It will also examine whether combined textual and visual rationales can improve trust and auditability without degrading accuracy or latency, and which prompting or adaptation strategies, such as curated prompt libraries, few-shot tuning, or lightweight fine-tuning, remain robust under domain shift.

By the end of the project you will have strengthened your skills in multimodal machine learning, open-vocabulary detection and grounding, prompting and adaptation, human-in-the-loop evaluation, and deployable ML for edge or line-side environments. Your deliverables will include a reproducible codebase, an interactive demo with explainable outputs, a thesis, and a paper-style report.

This project is co-supervised by Prof. Justin Dauwels, Amir Ghorbani Ghezeljehmeidan, and Prof. Willem Van Driel.

Requirements

We welcome applicants who enjoy image analysis, computer vision, or machine learning (ML) and have solid ML fundamentals. Prior computer vision or NLP projects are a plus but not required.

If this sounds like you, send a short note about your background and links to any relevant work, and we will get back to you as soon as possible.

Contact

dr.ir. Justin Dauwels

Signal Processing Systems Group

Department of Microelectronics

Last modified: 2025-08-17